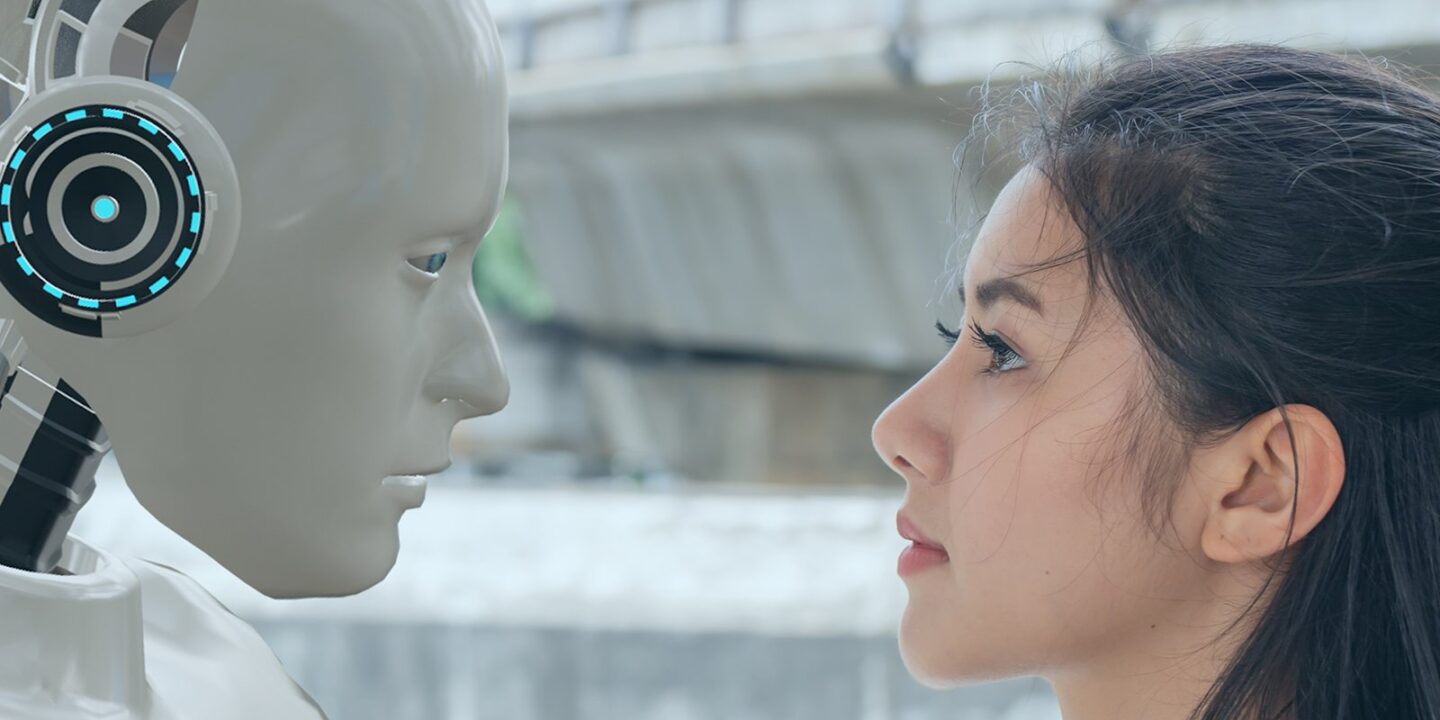

Artificial intelligence (AI) has advanced rapidly in recent years, not only in performing tasks traditionally handled by humans but also in detecting and responding to human emotions. This ability to perceive, interpret, and react to emotional cues is pushing AI beyond logical, task-based applications toward more nuanced, human-like interactions. From virtual assistants to mental health apps, AI systems are increasingly designed to infer emotional states, making our interactions with machines more intuitive, empathetic, and dynamic. In this article, we explore the advancements in AI’s ability to read, interpret, and respond to human emotions, focusing on the roles of natural language processing (NLP) and facial recognition technologies.

1. Natural Language Processing: Understanding Emotions Through Words

Natural language processing (NLP) is a branch of AI that focuses on enabling machines to understand and generate human language. Over the past decade, NLP technologies have evolved from basic command-response systems to advanced models capable of grasping context, tone, and even underlying emotions in conversations.

How NLP Understands Emotions:

- Sentiment Analysis: One of the core ways NLP systems detect emotions is through sentiment analysis, which estimates the sentiment behind written or transcribed spoken words. By assessing the polarity (positive, negative, or neutral) of a sentence, NLP systems can gauge emotional undertones. For example, a sentence like “I am so frustrated with this situation” would be scored as negative; a finer-grained emotion classifier is then needed to distinguish specific emotions such as anger or frustration.

- Tone Detection: Advanced NLP models can also detect tone and intent in a speaker’s words. In text, cues such as word choice, punctuation, and sentence rhythm help AI systems identify whether the tone is sarcastic, anxious, excited, or sad. In speech, acoustic cues such as pitch, pace, and volume add further evidence. For example, a conversational AI might discern the difference between “I’m fine” said in a neutral tone and “I’m fine” spoken with a dismissive or agitated tone.

- Contextual Understanding: NLP models like GPT-4 and BERT have significantly improved machines’ ability to track the context of a conversation, taking into account not only the words used but also the broader emotional context. This allows AI to engage in more meaningful, empathetic conversations. For instance, AI-powered chatbots can now infer a user’s emotional state and provide responses that align with it, whether offering empathy, encouragement, or even humor.
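
The polarity idea behind sentiment analysis can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how production systems work: the tiny `LEXICON`, the `NEGATORS` set, and both function names are made up for this sketch, and models such as BERT learn these associations from data instead of a hand-written word list.

```python
import re

# Hypothetical toy lexicon (word -> polarity weight), invented for illustration.
LEXICON = {
    "frustrated": -2, "angry": -2, "upset": -1, "terrible": -2,
    "happy": 2, "great": 2, "love": 2, "fine": 1,
}
# Words that flip the polarity of the word immediately after them.
NEGATORS = {"not", "never", "no"}


def polarity_score(text: str) -> int:
    """Sum lexicon weights, flipping the sign of a word that follows a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        weight = LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            weight = -weight
        score += weight
    return score


def polarity_label(text: str) -> str:
    """Map the numeric score onto the three polarity classes."""
    score = polarity_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(polarity_label("I am so frustrated with this situation"))  # negative
```

A word list like this misses sarcasm, context, and anything outside its vocabulary, which is why the model-based approaches described above are preferred in practice.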

Applications of NLP in Emotional AI:

- Customer Service: Many businesses use AI-powered customer service chatbots to interact with customers, and these bots are increasingly trained to detect frustration or dissatisfaction through sentiment analysis. By recognizing when a customer is upset, the AI can escalate the conversation to a human agent or adapt its responses to better address the customer’s emotional needs.

- Mental Health Support: AI-driven mental health apps (e.g., Woebot) analyze text input from users and offer emotional support. By recognizing signs of anxiety, depression, or distress, these tools can suggest coping mechanisms or indicate when a person should reach out for professional help.
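
The escalation behavior described for customer service can be sketched as a simple rule over recent sentiment scores. This is one possible design, not a description of any particular product: the `EscalationMonitor` class, its `window` and `threshold` parameters, and the assumption that each message already has a sentiment score between -1 and 1 are all hypothetical.

```python
from collections import deque


class EscalationMonitor:
    """Tracks recent per-message sentiment scores and flags when to hand off."""

    def __init__(self, window: int = 3, threshold: float = -0.5):
        # Both defaults are illustrative assumptions, not recommended values.
        self.threshold = threshold
        self.recent = deque(maxlen=window)  # keeps only the last `window` scores

    def observe(self, sentiment: float) -> bool:
        """Record a score in [-1, 1]; return True if a human should take over."""
        self.recent.append(sentiment)
        window_full = len(self.recent) == self.recent.maxlen
        average = sum(self.recent) / len(self.recent)
        return window_full and average <= self.threshold


monitor = EscalationMonitor()
for score in (-0.9, -0.8, -0.7):
    escalate = monitor.observe(score)
print(escalate)  # True: three consecutive strongly negative messages
```

Averaging over a short window, instead of reacting to a single message, keeps one sharp remark from triggering a handoff while still catching sustained frustration.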

2. Facial Recognition and Emotion Detection

Another key area of development in AI’s understanding of human emotions is facial recognition, used here in the sense of facial expression analysis: reading what a face expresses rather than identifying whose face it is. By analyzing facial expressions, AI can gain insights into a person’s emotional state, enabling machines to respond appropriately to non-verbal cues. These technologies have become much more sophisticated and can now classify a wide range of emotions, from happiness and surprise to sadness, anger, and fear.

How Facial Recognition Detects Emotions:

- Expression Analysis: AI tracks facial landmarks, points located around the eyebrows, eyes, and mouth, and uses their movement to analyze expressions and infer emotions. For example, a raised eyebrow might indicate surprise or curiosity, while a furrowed brow could signal confusion or anger. These movements are typically interpreted by machine learning models trained on large datasets of labeled facial expressions.

- Real-Time Emotion Detection: With the help of cameras and advanced algorithms, AI systems can process facial expressions in real time, providing immediate feedback. This is particularly useful in applications like customer service, where understanding a person’s mood can greatly enhance the interaction. For example, a virtual agent might adjust its tone or offer more empathetic responses if the system detects signs of distress or frustration.

- Multimodal Emotion Recognition: In some systems, facial recognition is combined with voice analysis and text sentiment analysis to provide a more comprehensive understanding of a person’s emotional state. For instance, an AI system can use both the speaker’s tone of voice and facial expressions to gauge the intensity of the emotions they are expressing, improving the machine’s ability to respond empathetically.
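
One common way to combine modalities is late fusion: each modality produces its own emotion probabilities, and the system takes a weighted average. The sketch below assumes that approach; the `EMOTIONS` tuple, the function names, the weights, and the example probabilities are all hypothetical values chosen for illustration, not measurements from a real system.

```python
from typing import Dict

# Assumed label set for this sketch; real systems define their own.
EMOTIONS = ("happy", "sad", "angry", "neutral")


def fuse(modality_probs: Dict[str, Dict[str, float]],
         weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted average of per-modality emotion probabilities (late fusion)."""
    total_weight = sum(weights[m] for m in modality_probs)
    fused = {}
    for emotion in EMOTIONS:
        weighted = sum(weights[m] * probs.get(emotion, 0.0)
                       for m, probs in modality_probs.items())
        fused[emotion] = weighted / total_weight
    return fused


def top_emotion(fused: Dict[str, float]) -> str:
    """Return the emotion with the highest fused probability."""
    return max(fused, key=fused.get)


# Made-up outputs from three hypothetical per-modality classifiers.
probs = {
    "face":  {"happy": 0.6, "sad": 0.1, "angry": 0.1, "neutral": 0.2},
    "voice": {"happy": 0.1, "sad": 0.2, "angry": 0.6, "neutral": 0.1},
    "text":  {"happy": 0.1, "sad": 0.2, "angry": 0.5, "neutral": 0.2},
}
weights = {"face": 0.4, "voice": 0.3, "text": 0.3}

print(top_emotion(fuse(probs, weights)))  # angry
```

In this made-up case the face alone suggests happiness, but voice and text together outweigh it, which is the kind of disagreement multimodal systems are meant to resolve.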

Applications of Facial Recognition in Emotional AI:

- Healthcare: AI systems are being used in clinical settings to monitor patients’ emotional well-being. For example, AI-powered cameras in hospitals or therapy sessions can track patients’ facial expressions, helping doctors or therapists identify signs of anxiety, depression, or pain that might not be verbally communicated.

- Retail and Marketing: Facial recognition is also being used in retail environments to enhance customer experiences. By detecting customer emotions during shopping, AI systems can tailor product recommendations, personalize offers, and adjust customer service in real time, with the aim of improving customer satisfaction.

- Security and Safety: AI can also improve public safety by identifying emotional cues that might signal distress or danger. For instance, surveillance systems powered by facial recognition and emotion-detection algorithms can be used to identify individuals who might be in distress, such as those displaying fear or anxiety, potentially triggering appropriate interventions.

3. Challenges and Ethical Considerations

While AI’s ability to understand and respond to human emotions has made significant strides, it also raises several important challenges and ethical questions.

Challenges:

- Accuracy: Emotional AI systems, while improving, are still not perfect. Recognizing emotions from facial expressions or text alone can be challenging, as emotions are often complex, nuanced, and context-dependent. For instance, sarcasm or mixed emotions may be difficult for AI to interpret correctly, leading to potential misunderstandings or inappropriate responses.

- Cultural Differences: Emotional expressions can vary significantly across cultures. A smile in one culture may indicate happiness, while in another, it may signal politeness or discomfort. AI systems need to account for these cultural differences in order to accurately interpret emotions on a global scale.

Ethical Concerns:

- Privacy: The use of facial recognition and emotion-detection technologies raises concerns about privacy. For instance, using AI to analyze individuals’ emotions in public spaces or via online platforms could be seen as an invasion of privacy if individuals have not consented to it. Governments and businesses must consider the ethical implications of deploying such technologies and ensure that personal data is handled responsibly.

- Manipulation and Bias: There is a risk that companies and governments may misuse emotion-detection AI for manipulative purposes, such as targeting vulnerable individuals with personalized ads or controlling behavior. Additionally, emotion-recognition algorithms trained on non-representative datasets can be biased, leading to inaccuracies and unfair treatment of certain demographic groups.
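
One basic check for the bias concern above is to compare a model's accuracy across demographic groups on a labeled evaluation set. The sketch below assumes a hypothetical record format of `(group, true_label, predicted_label)`; the function names, the group names "A" and "B", and the records themselves are toy values invented for illustration and do not represent real results.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    return max(per_group.values()) - min(per_group.values())


# Toy evaluation records, invented purely to exercise the functions.
records = [
    ("A", "happy", "happy"), ("A", "sad", "sad"),
    ("A", "angry", "angry"), ("A", "happy", "sad"),
    ("B", "happy", "happy"), ("B", "sad", "happy"),
    ("B", "angry", "angry"), ("B", "sad", "angry"),
]

per_group = accuracy_by_group(records)
print(per_group)                # {'A': 0.75, 'B': 0.5}
print(accuracy_gap(per_group))  # 0.25
```

A large gap does not by itself explain the cause, but it signals that the training data or the model deserves a closer look before deployment.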

4. The Future of AI and Emotional Intelligence

The future of AI in human emotion recognition holds exciting potential. As AI systems become more sophisticated, their ability to understand and respond to emotions will likely improve. Here are some possibilities for the future:

- Deeper Emotional Intelligence: As AI models become more capable, they may be able to recognize a broader range of emotions, including subtler, more complex feelings such as nostalgia or empathy. They could also learn to detect shifts in emotional states over time, enhancing long-term human-AI relationships.

- Personalized Emotional Support: AI could offer increasingly personalized emotional support, tailoring its responses based on a deep understanding of individual users’ emotional states, preferences, and histories.

- Integration with Augmented Reality (AR) and Virtual Reality (VR): Emotion-recognition AI could be integrated into AR/VR environments, creating more immersive and emotionally responsive experiences. In virtual spaces, AI could adapt to users’ emotional cues to provide real-time support or change the environment to suit their mood, enhancing both entertainment and therapeutic applications.

Conclusion: AI’s Emotional Future

AI’s ability to understand and respond to human emotions is a rapidly advancing field that is already transforming industries like healthcare, customer service, and entertainment. By harnessing technologies such as natural language processing and facial recognition, AI systems can engage in more empathetic, context-aware interactions with humans, enhancing experiences across a range of applications. However, as emotional AI continues to develop, it will be essential to address the ethical, cultural, and privacy concerns associated with this technology so that it is used responsibly. The future of AI is not just about smart machines. It is about creating intelligent systems that can connect with the emotional world of humans.